AI will not be our god. If it brings our demise, it will be because of human greed and stupidity.

I wrote somewhat extensively about how generative AIs are trained a few months ago. But here’s a three-bullet-point recap.

- Generative AI, whether text, audio, or images, requires a massive amount of training data.

- The training data is almost always created by humans who receive no compensation for their work.

- Most business models for generative AI compete with human creators.

Stolen Ideas

Not all AI training involves fundamental intellectual property infringement. At least one large company in this space is reportedly only using licensed stock images, and compensating artists; thanks Adobe. Also, the public domain exists, but that’s not how most generative AI is being trained.

Training a generative AI takes huge archives of images and text. Adobe aside, most AI training data is “ripped from the net,” as the kids say.

At least two large class action lawsuits are pending, each against multiple companies in this new AI space (1, 2), over the use of copyrighted works. Separately, Getty Images is suing Stability AI. That’s hardly an exhaustive list of pending AI-related IP litigation.

Even without the conclusion of those lawsuits, artifacts of the human data stolen to build machines keep appearing.

Wired traced an AI writing assistant’s use of a fanfiction sex trope to the original source. I highlighted a time when ChatGPT gave incorrect information from a single source. Futurism found examples of plagiarism in CNET’s AI “journalism” experiment.

Replacing Humans

Many use cases for generative AI clearly replace human creators. Besides CNET, Buzzfeed and Men’s Journal have already been running AI-generated articles. It’s hard to argue these uses of AI aren’t poised to replace human writers, if they aren’t replacing them already.

Writing, like nearly all creative work, does not in and of itself generate money. Something (books, subscriptions, tickets, advertising, and so forth) must be sold to turn writing into cash. So publishers, media executives, and the like exist, making money from writing (and other creative works).

In an ideal world, writers and business people would have a symbiotic relationship. We are not in an ideal world. To paraphrase The Beatles, we all live in an ad-supported pyramid scheme.

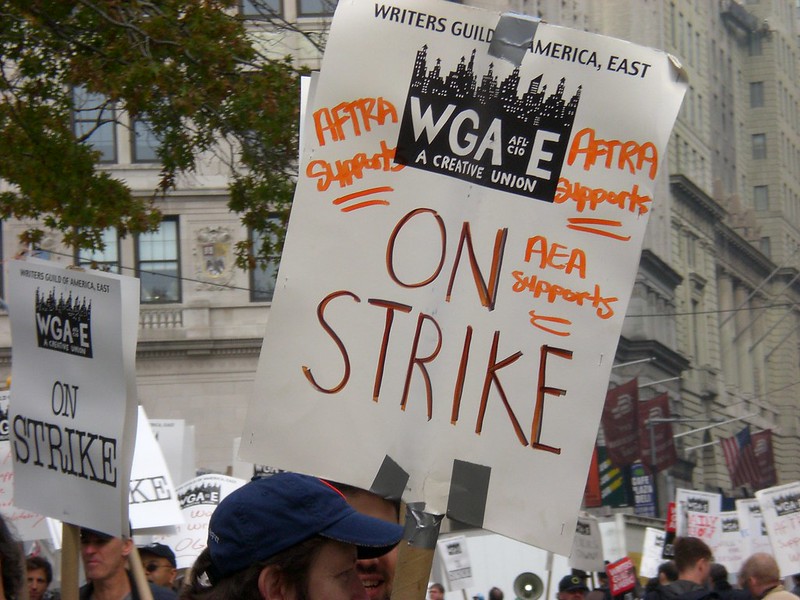

The model of running ads against eyeballs creates a disparity between how business people and writers feel about content. Writers value writing. The business perspective views writing as simply content units. Preventing AI from replacing writers is one of the core reasons for the current writers’ strike.

It is common for business folks to assume more content units must equate to more money. The business goal becomes creating content units that are good enough, for as low a cost as possible. Shortly before Buzzfeed News shuttered, staff of Buzzfeed’s News division were told to focus on increasing the volume of stories published each day.

The content units mindset makes AI replacing human writers inevitable. This AI future won’t just replace writers. When the goal is churning out more content units, ever cheaper, every creative is on the chopping block. The sword that will cut their jobs is being forged with their work.

Unseen Harm

The writers on strike have made themselves known. They have a collective voice, and we hear it. AI has voiceless victims as well.

Big Technology published an article about Richard Mathenge and others who worked on ChatGPT as part of the team that labeled explicit content. From the article:

The type of work Mathenge performed has been crucial for bots like ChatGPT and Bard to function — and feel magical — yet it’s been widely overlooked. In a process called Reinforcement Learning from Human Feedback, or RLHF, bots become smarter as humans label content, teaching them how to optimize based on that feedback. AI leaders, including OpenAI’s Sam Altman, have praised the practice’s technical effectiveness, yet they rarely talk about the cost some humans pay to align the AI systems with our values. Mathenge and his colleagues were on the business end of that reality.

Mathenge and a Kenya-based data labeling team, managed by U.S. firm Sama, were paid about $1 per hour. OpenAI told Big Technology it believed it was paying its Sama contractors $12.50 per hour.

The situation these human workers dealt with isn’t without coverage. Time published an investigation into the Kenyan data labelers earlier this year that received a lot of attention. Earlier this month, NBC News covered these workers and U.S. workers in a similar situation.

Still, articles hyping up generative AI, and even the “we spoke to a chatbot” genre of reports, far outnumber those talking about the human cost of building artificial intelligence.

Predatory Business

Grief Tech is a category of AI that bills itself as helping people cope with the loss of a loved one. For KnowTechie, I wrote about how predatory I find this use case. It’s exploiting emotionally vulnerable people the way séances always have.

Grief Tech is a science fiction twist on fraud as old as history. Other classical grifts are also getting a modern spin thanks to AI. Impersonation scams, where scammers pretend to be a trusted entity, have been supercharged by AI.

The Federal Trade Commission issued a consumer alert that scammers could target people with the voice of a loved one. That’s not a hypothetical problem. AI-generated voices or voice changers have been used in serious frauds and scams.

Back in 2019, criminals used these voice-changing tools to scam $243,000 out of a U.K.-based energy firm. In 2020, a manager of a Japanese firm in Hong Kong was tricked into making several large transfers by someone using this technology.

A man in Texas said his father was scammed out of $1,000 by someone using an AI voice. Collectively, eight senior citizens in Canada lost a combined $200,000 to scammers impersonating loved ones in crisis. And most disturbingly, a woman in Arizona was reportedly asked to pay a $1 million ransom for her daughter’s safe return by scammers who faked her daughter’s voice.

The Washington Post reported that in 2022 the FTC received over 36,000 reports of people swindled by someone presenting themselves as a friend or family member. Over 5,100 of those incidents happened over the phone.

Loved ones and known service providers are not the only people scammers impersonate. It is disturbingly easy to impersonate a celebrity using AI. A song called “Heart on My Sleeve,” featuring the AI-generated voices of Drake and the artist formerly known as The Weeknd, recently went viral.

Scammers are using digital ads featuring celebrities. I wrote about scam ads impersonating MrBeast. VerifyThis reported on ads impersonating Joe Biden promoting a fake stimulus program.

The Wall Street Journal covered several uses of deepfake public figures in advertising. When a deepfake is used without authorization, celebrities have had success in court. But digital ads aren’t a Super Bowl commercial, and they can fly below the radar.

This technology is wild, and already out of Pandora’s box. No amount of self-serving calls for AI regulation, or actual regulation, can reset things to zero.

Article by Mason Pelt of Push ROI. First published on MasonPelt.com on May 26, 2023. Photo: “Wall Street writers strike.” by kona99 is licensed under CC BY-SA 2.0.